【深度学习】C++ onnx Yolov8 目标检测推理

- 导出onnx模型

- 代码

- onnx_detect_infer.h

- onnx_detect_infer.cpp

- main.cpp

- CMAKELIST

导出onnx模型

python 中导出

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("best.pt")

# # Export the model to ONNX format

model.export(format="onnx", dynamic=False, simplify=True, imgsz = (640,640), opset=12, half=False, int8=False) # creates 'yolov8n.onnx'

代码

onnx_detect_infer.h

#include <iostream>

#include <opencv2/opencv.hpp>

#include <onnxruntime_cxx_api.h>

#include <numeric>

#define _PRINT true

namespace det

{

struct OutputDet

{

int id;

float confidence;

cv::Rect box;

};

struct param

{

int batchSize = 1;

int netWidth = 640; //ONNX-net-input-width

int netHeight = 640; //ONNX-net-input-height

bool dynamicShape = true; //onnx support dynamic shape

float classThreshold = 0.25;

float nmsThrehold= 0.45;

float maskThreshold = 0.5;

};

}

class detectModel

{

public:

detectModel():m_ortMemoryInfo(Ort::MemoryInfo::CreateCpu(OrtAllocatorType::OrtDeviceAllocator, OrtMemType::OrtMemTypeCPUOutput)) {};

~detectModel()

{

delete m_ortSession;

m_inputNodeNames.clear();

m_outputNodeNames.clear();

m_inputTensorShape.clear();

m_outputTensorShape.clear();

};

bool readModel(const std::string& modelPath, bool isCuda=false, int cudaId=0, bool warmUp=true);

bool onnxDetect(cv::Mat& srcImg, std::vector<det::OutputDet>& output);

bool onnxBatchDetect(std::vector<cv::Mat>& srcImgs, std::vector<std::vector<det::OutputDet>>& output);

void drawPred(cv::Mat& img, std::vector<det::OutputDet> result,

std::vector<std::string> classNames, std::vector<cv::Scalar> color);

// parameter.

det::param m_param = {1,640,640,true,0.25,0.45,0.5};

public:

std::vector<std::string> m_className = {"blackPoint"};

private:

// ort parameter

Ort::Env m_ortEnv = Ort::Env(OrtLoggingLevel::ORT_LOGGING_LEVEL_ERROR, "Yolov8n"); // set up your log level and project name.

Ort::MemoryInfo m_ortMemoryInfo;

Ort::SessionOptions m_ortSessionOptions = Ort::SessionOptions(); // init. default do not need any parameter.

Ort::Session* m_ortSession = nullptr;

std::shared_ptr<char> m_inputName, m_output_name;

std::vector<char*> m_inputNodeNames;

std::vector<char*> m_outputNodeNames;

size_t m_inputNodesNum = 0;

size_t m_outputNodesNum = 0;

ONNXTensorElementDataType m_inputNodeDataType;

ONNXTensorElementDataType m_outputNodeDataType;

std::vector<int64_t> m_inputTensorShape;

std::vector<int64_t> m_outputTensorShape;

private:

template<typename T>

T vectorProduct(const std::vector<T>& v)

{

return std::accumulate(v.begin(), v.end(), 1, std::multiplies<T>());

}

int preProcessing(const std::vector<cv::Mat>& srcImgs,

std::vector<cv::Mat>& outSrcImgs,

std::vector<cv::Vec4d>& params);

void letterBox(const cv::Mat& image, cv::Mat& outImage, cv::Vec4d& params, const cv::Size& newShape = cv::Size(640, 640),

bool autoShape = false, bool scaleFill=false, bool scaleUp=true, int stride= 32, const cv::Scalar& color = cv::Scalar(114,114,114));

bool checkPath(const std::string path);

};

onnx_detect_infer.cpp

#include "onnx_detect_infer.h"

#include <fstream>

using namespace std;

using namespace Ort;

using namespace cv;

using namespace det;

bool detectModel::checkPath(const std::string path)

{

string tempPath = path;

ifstream f(tempPath.c_str());

return f.good();

}

bool detectModel::readModel(const std::string &modelPath, bool isCuda, int cudaId, bool warmUp)

{

if(m_param.batchSize < 1)

{

m_param.batchSize = 1;

}

try

{

if (!checkPath(modelPath))

{

if(_PRINT)

{

cout << "your model path isn't corrent. check up : " << modelPath << endl;

}

return false;

}

// check up whether there is a gpu device.

std::vector<std::string> available_providers = GetAvailableProviders();

auto cuda_available = std::find(available_providers.begin(), available_providers.end(), "CUDAExecutionProvider");

// using cpu threads.

// m_ortSessionOptions.SetIntraOpNumThreads(4);

// m_ortSessionOptions.SetExecutionMode(ORT_SEQUENTIAL);

// gpu mode set up.

if (isCuda && (cuda_available == available_providers.end()))

{

if(_PRINT)

{

std::cout << "Your ORT build without GPU. Change to CPU." << std::endl;

std::cout << "************* Infer model on CPU! *************" << std::endl;

}

}

else if (isCuda && (cuda_available != available_providers.end()))

{

if(_PRINT)

{

std::cout << "************* Infer model on GPU! *************" << std::endl;

}

#if ORT_API_VERSION < ORT_OLD_VISON

OrtCUDAProviderOptions cudaOption;

cudaOption.device_id = cudaId;

m_ortSessionOptions.AppendExecutionProvider_CUDA(cudaOption);

#else

OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(m_ortSessionOptions, cudaId);

#endif

}

else

{

if(_PRINT)

{

std::cout << "************* Infer model on CPU! *************" << std::endl;

}

}

// GraphOptimizationLevel::ORT_DISABLE_ALL -> Disables all optimizations

// GraphOptimizationLevel::ORT_ENABLE_BASIC -> Enables basic optimizations

// GraphOptimizationLevel::ORT_ENABLE_EXTENDED -> Enables basic and extended optimizations

// GraphOptimizationLevel::ORT_ENABLE_ALL -> Enables all available optimizations including layout

// I have tested all modes, and ort_enable_all had fastest speed.

m_ortSessionOptions.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL); // ORT_ENABLE_ALL

#ifdef _WIN32

std::wstring model_path(modelPath.begin(), modelPath.end());

m_ortSession = new Ort::Session(m_ortEnv, model_path.c_str(), m_ortSessionOptions);

#else

m_ortSession = new Ort::Session(m_ortEnv, modelPath.c_str(), m_ortSessionOptions);

#endif

Ort::AllocatorWithDefaultOptions allocator;

//init input

m_inputNodesNum = m_ortSession->GetInputCount();

#if ORT_API_VERSION < ORT_OLD_VISON

m_inputName = _OrtSession->GetInputName(0, allocator);

m_inputNodeNames.push_back(m_inputName);

#else

m_inputName = std::move(m_ortSession->GetInputNameAllocated(0, allocator));

m_inputNodeNames.push_back(m_inputName.get());

#endif

Ort::TypeInfo inputTypeInfo = m_ortSession->GetInputTypeInfo(0);

auto input_tensor_info = inputTypeInfo.GetTensorTypeAndShapeInfo();

m_inputNodeDataType = input_tensor_info.GetElementType();

m_inputTensorShape = input_tensor_info.GetShape();

if (m_inputTensorShape[0] == -1)

{

m_param.dynamicShape = true;

m_inputTensorShape[0] = m_param.batchSize;

}

if (m_inputTensorShape[2] == -1 || m_inputTensorShape[3] == -1) {

m_param.dynamicShape = true;

m_inputTensorShape[2] = m_param.netHeight;

m_inputTensorShape[3] = m_param.netWidth;

}

// init output

m_outputNodesNum = m_ortSession->GetOutputCount();

#if ORT_API_VERSION < ORT_OLD_VISON

m_output_name = _OrtSession->GetOutputName(0, allocator);

m_outputNodeNames.emplace_back(m_output_name);

#else

m_output_name = std::move(m_ortSession->GetOutputNameAllocated(0, allocator));

m_outputNodeNames.emplace_back(m_output_name.get());

#endif

Ort::TypeInfo type_info_output0(nullptr);

type_info_output0 = m_ortSession->GetOutputTypeInfo(0); //output0

auto tensor_info_output0 = type_info_output0.GetTensorTypeAndShapeInfo();

m_outputNodeDataType = tensor_info_output0.GetElementType();

m_outputTensorShape = tensor_info_output0.GetShape();

//warm up

if (isCuda && warmUp)

{

//draw run

size_t input_tensor_length = vectorProduct(m_inputTensorShape);

float* temp = new float[input_tensor_length];

std::vector<Ort::Value> input_tensors;

std::vector<Ort::Value> output_tensors;

input_tensors.push_back(Ort::Value::CreateTensor<float>(

m_ortMemoryInfo, temp, input_tensor_length, m_inputTensorShape.data(),

m_inputTensorShape.size()));

for (int i = 0; i < 3; ++i)

{

output_tensors = m_ortSession->Run(Ort::RunOptions{ nullptr },

m_inputNodeNames.data(),

input_tensors.data(),

m_inputNodeNames.size(),

m_outputNodeNames.data(),

m_outputNodeNames.size());

}

delete[]temp;

}

}

catch (const std::exception&)

{

return false;

}

return true;

}

bool detectModel::onnxBatchDetect(std::vector<cv::Mat> &srcImgs, std::vector<std::vector<OutputDet> > &output)

{

vector<Vec4d> params;

vector<Mat> input_images;

cv::Size input_size(m_param.netWidth, m_param.netHeight);

// preProcessing.

preProcessing(srcImgs, input_images, params);

// [0~255] --> [0~1]; BGR2RGB.

Mat blob = cv::dnn::blobFromImages(input_images, 1 / 255.0, input_size, Scalar(0,0,0), true, false);

// get output result.

int64_t input_tensor_length = vectorProduct(m_inputTensorShape);

std::vector<Ort::Value> input_tensors;

std::vector<Ort::Value> output_tensors;

input_tensors.push_back(Ort::Value::CreateTensor<float>(m_ortMemoryInfo, (float*)blob.data,

input_tensor_length, m_inputTensorShape.data(),

m_inputTensorShape.size()));

output_tensors = m_ortSession->Run(Ort::RunOptions{ nullptr },

m_inputNodeNames.data(), input_tensors.data(), m_inputNodeNames.size(),

m_outputNodeNames.data(), m_outputNodeNames.size() );

//post-process

int net_width = m_className.size() + 4;

float* all_data = output_tensors[0].GetTensorMutableData<float>(); // outputs of the first picture.

m_outputTensorShape = output_tensors[0].GetTensorTypeAndShapeInfo().GetShape(); // output dimension [1, 84, 8400]

int64_t one_output_length = vectorProduct(m_outputTensorShape) / m_outputTensorShape[0]; // the length of the memory of the output of a picture 8400*84

for (int img_index = 0; img_index < srcImgs.size(); ++img_index)

{

Mat output0 = Mat(Size((int)m_outputTensorShape[2], (int)m_outputTensorShape[1]), CV_32F, all_data).t(); // [1, 84 ,8400] -> [1, 8400, 84]

all_data += one_output_length; // point to the adress of the picture.

float* pdata = (float*)output0.data; // [x,y,w,h,class1,class2.....class80]

int rows = output0.rows;

// predict box.

vector<int> class_ids;

vector<float> confidences;

vector<Rect> boxes;

for (int r=0; r<rows; ++r) // can not support yolov5, if use v5, need to change it

{

Mat scores(1, m_className.size(), CV_32F, pdata + 4); // score

Point classIdPoint;

double max_class_soces;

minMaxLoc(scores, 0, &max_class_soces, 0, &classIdPoint);

max_class_soces = (float)max_class_soces;

if (max_class_soces >= m_param.classThreshold)

{

// rect [x,y,w,h]

float x = (pdata[0] - params[img_index][2]) / params[img_index][0]; //x

float y = (pdata[1] - params[img_index][3]) / params[img_index][1]; //y

float w = pdata[2] / params[img_index][0]; //w

float h = pdata[3] / params[img_index][1]; //h

int left = MAX(int(x - 0.5 *w +0.5), 0);

int top = MAX(int(y - 0.5*h + 0.5), 0);

class_ids.push_back(classIdPoint.x);

confidences.push_back(max_class_soces);

boxes.push_back(Rect(left, top, int(w + 0.5), int(h + 0.5)));

}

pdata += net_width; // next box

}

// nms process

vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, m_param.classThreshold, m_param.nmsThrehold, nms_result); // 还需要classThreshold?

cv::Rect holeImgRect(0, 0, m_param.netWidth, m_param.netHeight);

// get predict output.

vector<OutputDet> temp_output;

for (size_t i=0; i<nms_result.size(); ++i)

{

int idx = nms_result[i];

OutputDet result;

result.id = class_ids[idx];

result.confidence = confidences[idx];

result.box = boxes[idx] & holeImgRect;

temp_output.push_back(result);

}

output.push_back(temp_output);

}

if (output.size())

return true;

else

return false;

}

bool detectModel::onnxDetect(cv::Mat &srcImg, std::vector<OutputDet> &output)

{

vector<Mat> input_data = {srcImg};

vector<vector<OutputDet>> temp_output;

if(onnxBatchDetect(input_data, temp_output))

{

output = temp_output[0];

return true;

}

else return false;

}

int detectModel::preProcessing(const std::vector<cv::Mat> &SrcImgs,

std::vector<cv::Mat> &OutSrcImgs,

std::vector<cv::Vec4d> ¶ms)

{

OutSrcImgs.clear();

Size input_size = Size(m_param.netWidth, m_param.netHeight);

for (size_t i=0; i<SrcImgs.size(); ++i)

{

Mat temp_img = SrcImgs[i];

Vec4d temp_param = {1,1,0,0};

if (temp_img.size() != input_size)

{

Mat borderImg;

letterBox(temp_img, borderImg, temp_param, input_size, false, false, true, 32);

OutSrcImgs.push_back(borderImg);

params.push_back(temp_param);

}

else

{

OutSrcImgs.push_back(temp_img);

params.push_back(temp_param);

}

}

int lack_num = m_param.batchSize - SrcImgs.size();

if (lack_num > 0)

{

Mat temp_img = Mat::zeros(input_size, CV_8UC3);

Vec4d temp_param = {1,1,0,0};

OutSrcImgs.push_back(temp_img);

params.push_back(temp_param);

}

return 0;

}

void detectModel::letterBox(const cv::Mat& image, cv::Mat& outImage, cv::Vec4d& params, const cv::Size& newShape,

bool autoShape, bool scaleFill, bool scaleUp, int stride, const cv::Scalar& color)

{

// get smaller scale size.

cv::Size shape = image.size();

float r = std::min((float)newShape.height / (float)shape.height,

(float)newShape.width / (float)shape.width);

if (!scaleUp)

r = std::min(r, 1.0f);

// source image size

float ratio[2]{r,r};

int new_un_pad[2] = { (int)std::round((float)shape.width * r), (int)std::round((float)shape.height * r)};

// detect the plexs of the size of detect object.

auto dw = (float)(newShape.width - new_un_pad[0]);

auto dh = (float)(newShape.height - new_un_pad[1]);

if (autoShape)

{

dw = (float)((int)dw % stride);

dh = (float)((int)dh % stride);

}

else if (scaleFill)

{

dw = 0.0f;

dh = 0.0f;

new_un_pad[0] = newShape.width;

new_un_pad[1] = newShape.height;

ratio[0] = (float)newShape.width / (float)shape.width;

ratio[1] = (float)newShape.height / (float)shape.height;

}

dw /= 2.0f;

dh /= 2.0f;

// resize

if (shape.width != new_un_pad[0] && shape.height != new_un_pad[1])

{

cv::resize(image, outImage, cv::Size(new_un_pad[0], new_un_pad[1]));

}

else{

outImage = image.clone();

}

// padding, make every pictures have the same size.

int top = int(std::round(dh - 0.1f));

int bottom = int(std::round(dh + 0.1f));

int left = int(std::round(dw - 0.1f));

int right = int(std::round(dw + 0.1f));

params[0] = ratio[0]; // scale of width

params[1] = ratio[1]; // scale of height

params[2] = left; // the number of padding from left to right

params[3] = top; //the number of padding from top to bottom

cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);

}

void detectModel::drawPred(cv::Mat& img, std::vector<OutputDet> result,

std::vector<std::string> classNames,

std::vector<cv::Scalar> color)

{

for (size_t i=0; i<result.size(); i++)

{

int left,top;

left = result[i].box.x;

top = result[i].box.y;

// rectangle the object.

rectangle(img, result[i].box,color[result[i].id], 2, 8);

// put text.

string label = to_string(result[i].confidence); //classNames[result[i].id] + ":" + to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

}

main.cpp

#include <iostream>

#include <opencv2/opencv.hpp>

#include "onnx_detect_infer.h"

#include <sys/time.h>

using namespace std;

using namespace cv;

using namespace cv::dnn;

int main()

{

string model_path = "/home/xiaoxin/Documents/ultralytics-main/last.onnx";

detectModel yolov8;

if (!yolov8.readModel(model_path))

{

return -1;

}

yolov8.m_param.batchSize = 1;

yolov8.m_param.netWidth = 640;

yolov8.m_param.netHeight = 640;

yolov8.m_param.dynamicShape = false;

yolov8.m_param.classThreshold = 0.25;

yolov8.m_param.nmsThrehold= 0.45;

yolov8.m_param.maskThreshold = 0.5;

// random color

vector<Scalar> color;

srand((time(0)));

for (int i=0; i<80; i++)

{

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b,g,r));

}

string inputPath = "/home/xiaoxin/Documents/ultralytics-main/datasets/Tray/labelImg";

vector<String> vPaths;

glob(inputPath,vPaths,true);

for(int i = 0; i < vPaths.size(); ++i)

{

Mat frame = imread(vPaths[i], 1);

struct timeval t1, t2;

double timeuse;

vector<det::OutputDet> reuslt;

gettimeofday(&t1, NULL);

for(int i = 0; i < 50 ; i++)

{

bool find = yolov8.onnxDetect(frame, reuslt);

}

gettimeofday(&t2, NULL);

yolov8.drawPred(frame, reuslt, yolov8.m_className, color);

timeuse = (t2.tv_sec - t1.tv_sec) +

(double)(t2.tv_usec -t1.tv_usec)/1000000; //s

cout << timeuse*1000 << endl;

resize(frame, frame, Size(0,0), 3, 3);

imshow("result", frame);

waitKey(0);

}

return 0;

}

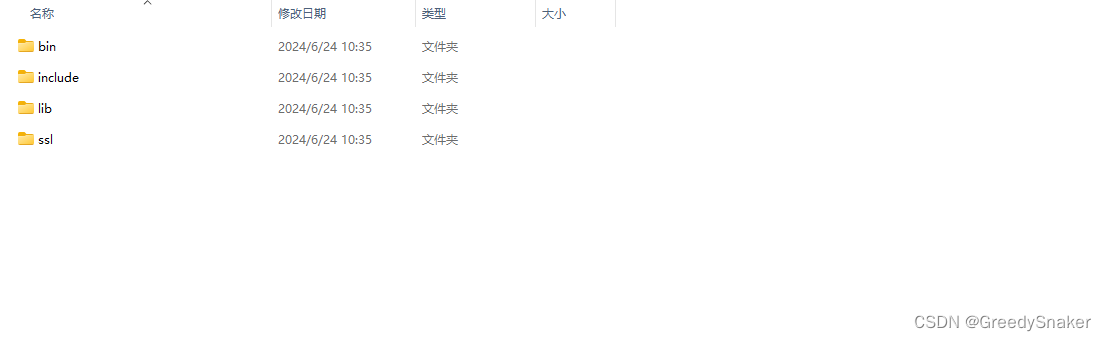

CMAKELIST

CMAKE_MINIMUM_REQUIRED(VERSION 3.0.0)

project(YOLOv8)

SET (OpenCV_DIR path/to/opencv/build) #opencv root

#SET (ONNXRUNTIME_DIR path/to/onnxruntime)

Set(ONNXRUNTIME_DIR ${PROJECT_SOURCE_DIR}/onnxruntime-linux-x64-gpu-1.18.0)

FIND_PACKAGE(OpenCV REQUIRED)

#include_directories("")

ADD_EXECUTABLE(YOLOv8 main.cpp onnx_detect_infer.cpp)

SET(CMAKE_CXX_STANDARD 14)

SET(CMAKE_CXX_STANDARD_REQUIRED ON)

TARGET_INCLUDE_DIRECTORIES(YOLOv8 PRIVATE "${ONNXRUNTIME_DIR}/include")

TARGET_COMPILE_FEATURES(YOLOv8 PRIVATE cxx_std_14)

TARGET_LINK_LIBRARIES(YOLOv8 ${OpenCV_LIBS})

if (WIN32)

TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/onnxruntime.lib")

endif(WIN32)

if (UNIX)

TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/libonnxruntime.so")

endif(UNIX)